1. (50 min) What's new in ClickHouse 23.3.

2. (10 min) Q&A.

ClickHouse Spring Release.

— 22 new features 🌳

— 14 performance optimizations 🛼

— 61 bug fixes 🦋

Did you know?

When you DROP table in ClickHouse, the table is not instantly removed

— because ClickHouse uses MVCC for atomic DDL operations;

— it allows queries to continue running from a dropped table,

and creating another table in place of a dropped table;

Just in case, tables are physically removed only after 8 minutes.

Why don't allow restoring dropped tables?

SELECT * FROM system.dropped_tables

— info about the recently dropped tables;

UNDROP TABLE my_table

— restore the recently dropped table;

Developer: chen (xiedeyantu).

The settings from the global server config:

SELECT * FROM system.server_settings

Demo

Developer: Artem Brustovetskii.

ATTACH TABLE uk_price_paid UUID 'cf712b4f-2ca8-435c-ac23-c4393efe52f7'

(

price UInt32,

date Date,

postcode1 LowCardinality(String),

postcode2 LowCardinality(String),

type Enum8('other' = 0, 'terraced' = 1, 'semi-detached' = 2, 'detached' = 3, 'flat' = 4),

is_new UInt8,

duration Enum8('unknown' = 0, 'freehold' = 1, 'leasehold' = 2),

addr1 String,

addr2 String,

street LowCardinality(String),

locality LowCardinality(String),

town LowCardinality(String),

district LowCardinality(String),

county LowCardinality(String)

)

ENGINE = MergeTree

ORDER BY (postcode1, postcode2, addr1, addr2)

SETTINGS disk = disk(

type = cache,

path = '/home/milovidov/.cache/clickhouse-local/',

max_size = '10G',

disk = disk(

type = web,

endpoint = 'https://raw.githubusercontent.com/ClickHouse/web-tables-demo/main/web/'));

Developer: Kseniia Sumarokova.

ClickHouse has support for MySQL wire protocol.

mysql --port 9004 --host 127.0.0.1 --user default

And for PostgreSQL wire protocol as well.

ClickHouse's SQL dialect is different.

The set of SQL functions is also different.

SELECT INSTR('ClickHouse', 'lick');

SELECT 'ClickHouse' REGEXP 'M?ouse$';

SELECT STR_TO_DATE('03/03/2023', '%m/%d/%Y');

This is compatible with MySQL, but ugly.

Developer: Robert Schulze, Flynn.

SELECT positionCaseInsensitive('ClickHouse', 'lick');

SELECT match('ClickHouse', 'M?ouse$');

SELECT toDateTime('2023-03-30 01:02:03');

SELECT parseDateTime('03/03/2023', '%m/%d/%Y');

This is better.

parseDateTime

parseDateTimeOrZero

parseDateTimeOrNull

SELECT parseDateTime('2023/03/30 01:02', '%Y/%m/%d %H:%M')

parseDateTimeInJodaSyntax

parseDateTimeInJodaSyntaxOrZero

parseDateTimeInJodaSyntaxOrNull

SELECT parseDateTimeInJodaSyntax('2023/03/30 01:02', 'yyyy/MM/dd HH:mm')

Developer: Robert Shulze.

Control over compression method:

SET output_format_parquet_compression_method

= 'snappy|lz4|brotli|zstd|gzip|none';

Control over format version:

SET output_format_parquet_version = '2.6'

Supported versions are: 1.0, 2.4, 2.6, 2.latest.

Developer: Pavel Kruglov.

config.yaml for clickhouse-client:

openSSL:

client:

certificateFile: 'path.cert'

privateKeyFile: 'path.key'

Developer: Nikolai Degterinskiy.

Next steps: authentication with SSH keys?

Lightweight DELETEs are production ready

and enabled by default in 23.3 LTS.

DELETE FROM t WHERE ...

Developer: Alexander Gololobov.

Next steps: lightweight updates?

Marks take 3..6 times less space in RAM!

Enabled by default.

Demo.

Developer: Michael Kolupaev.

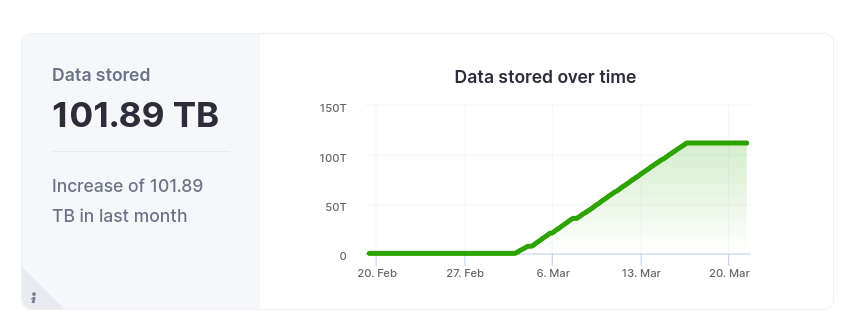

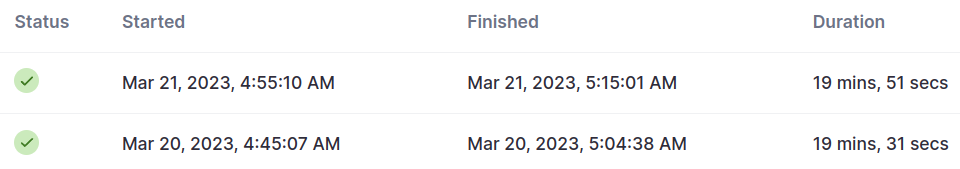

Full 100 TB and 1 000 000 files backup in one hour.

Incremental 100 TB backups in 20 minutes.

— server-size copies for S3;

— dedicated thread pool;

— increased parallelism;

— faster metadata writing;

— retries of Keeper operations;

Developers: Vitaly Baranov, Nikita Mikhailov, Alexey Milovidov.

Without parallel replicas:

SELECT count() FROM github_events WHERE body ILIKE '%ClickHouse%';

Elapsed: 458.332 sec. 1.18 TB (2.58 GB/s.)

With 10 parallel replicas:

SET allow_experimental_parallel_reading_from_replicas = 1,

max_parallel_replicas = 10;

SELECT count() FROM github_events WHERE body ILIKE '%ClickHouse%';

Elapsed: 40.284 sec. 1.18 TB (29.34 GB/s.)

With 100 parallel replicas:

Elapsed: 8.790 sec. 1.18 TB (134.44 GB/s.)

Developer: Nikita Mikhailov.

SET max_parallel_replicas = 100,

parallel_replicas_custom_key = 'UserID',

parallel_replicas_custom_key_filter_type = 'default';

-- parallel_replicas_custom_key_filter_type = 'range'

Represents every replica as a shard for distributed queries

by pre-filtering data by remainder of division or a range.

Useful for large JOIN or GROUP BY with

the distributed_group_by_no_merge setting.

Developer: Antonio Andelic.

SET partial_result_on_first_cancel = 1;

After query cancellation: stop reading the data

but get the query result, as all the data is processed.

Demo

Developer: Alexey Perevyshin.

CREATE TEMPORARY TABLE tmp_uk_price_paid

(

...

)

ENGINE = MergeTree

ORDER BY (postcode1, postcode2, addr1, addr2)

What for?

Developer: Roman Vasin.

Superset:

— native support for ClickHouse;

— ships ClickHouse support out-of-the-box!

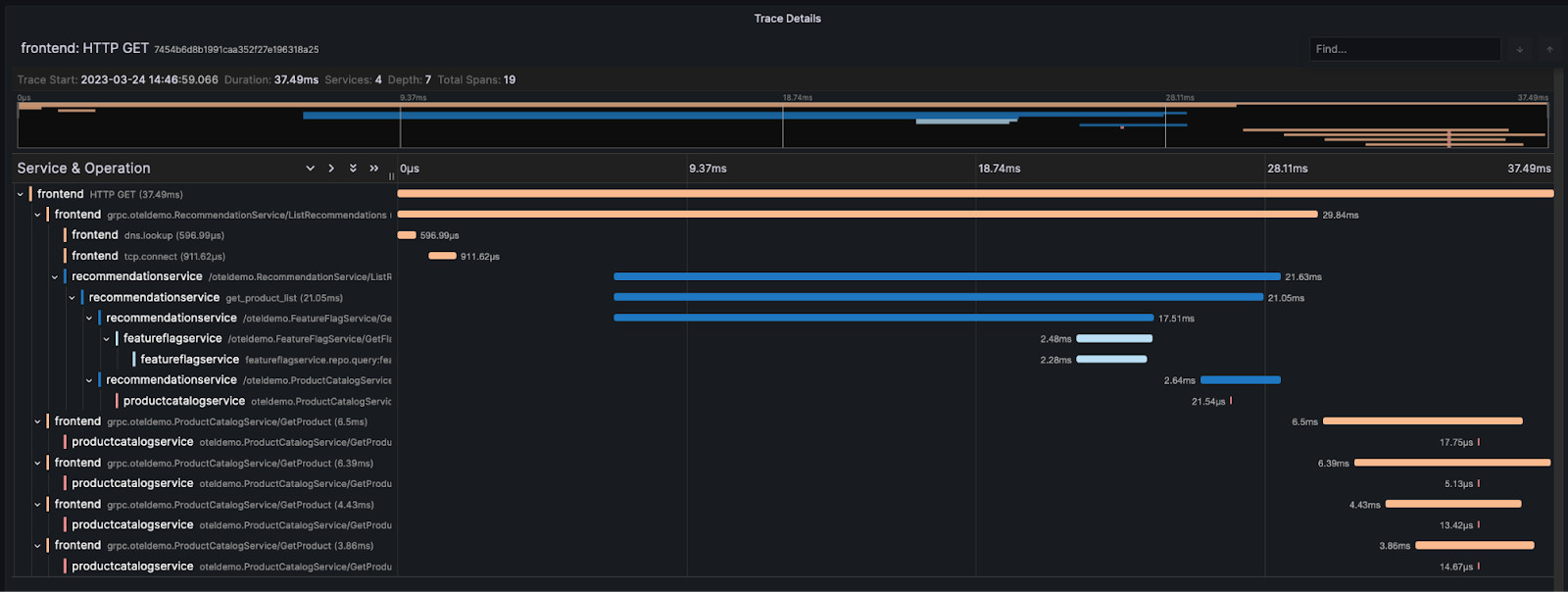

Grafana:

— support for traces visualization;

Updates for Python, Node.js, Go, and Java drivers.

And if Grafana is not enough for you, you can use QRYN:

— polyglot monitoring and observability (qryn.dev).

— free 30-day trial with $300 credits up to 10 TB of data;

— affordable clusters for developers with full HA < $100/month

Try it! https://clickhouse.cloud/.