1. (30 min) What's new in ClickHouse 22.5.

2. (30 min) Q&A.

May's stable release.

GROUP BY modifiers:

— WITH TOTALS;

— WITH ROLLUP;

— WITH CUBE;

— GROUPING SETS;

Developers: Dmitriy Novik, taylor12805, Maksim Sipliviy.

GROUP BY modifiers:

(demo)

— WITH TOTALS:

add a row with total values;

— WITH ROLLUP;

aggregate by all prefixes of keys:

given k1, k2, aggregate by (), (k1), (k1, k2);

— WITH CUBE;

aggregate by all subsets of keys;

given k1, k2, aggregate by (), (k1), (k2), (k1, k2);

— new: GROUPING SETS;

aggregate by any sets of keys;

generalization of all the above;

Upcoming:

— GROUPING function to distinguish different sets.

— rollup_use_null setting for standard compatibility.

MySQLDump as input format:

--

-- Table structure for table `test`

--

DROP TABLE IF EXISTS `test`;

/*!40101 SET @saved_cs_client = @@character_set_client */;

/*!50503 SET character_set_client = utf8mb4 */;

CREATE TABLE `test` (

`x` int DEFAULT NULL,

`y` int DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci;

/*!40101 SET character_set_client = @saved_cs_client */;

--

-- Dumping data for table `test`

--

LOCK TABLES `test` WRITE;

/*!40000 ALTER TABLE `test` DISABLE KEYS */;

INSERT INTO `test` VALUES (1,NULL),(2,NULL),(3,NULL),(3,NULL),(4,NULL),

(5,NULL),(6,7);

/*!40000 ALTER TABLE `test` ENABLE KEYS */;

UNLOCK TABLES;

MySQLDump as input format:

Use case:

— you cannot connect to MySQL for direct import;

— you cannot create a TSV dump from MySQL;

— all that you have is a table dump from MySQL;

Developer: Pavel Kruglov.

MySQLDump as input format:

Options:

— input_format_mysql_dump_table_name

— select one of the tables from the dump;

— input_format_mysql_dump_map_column_names

— match corresponding column names;

Bonus:

— MySQLDump format supports schema inference!

SELECT * FROM file('dump.sql', MySQLDump);

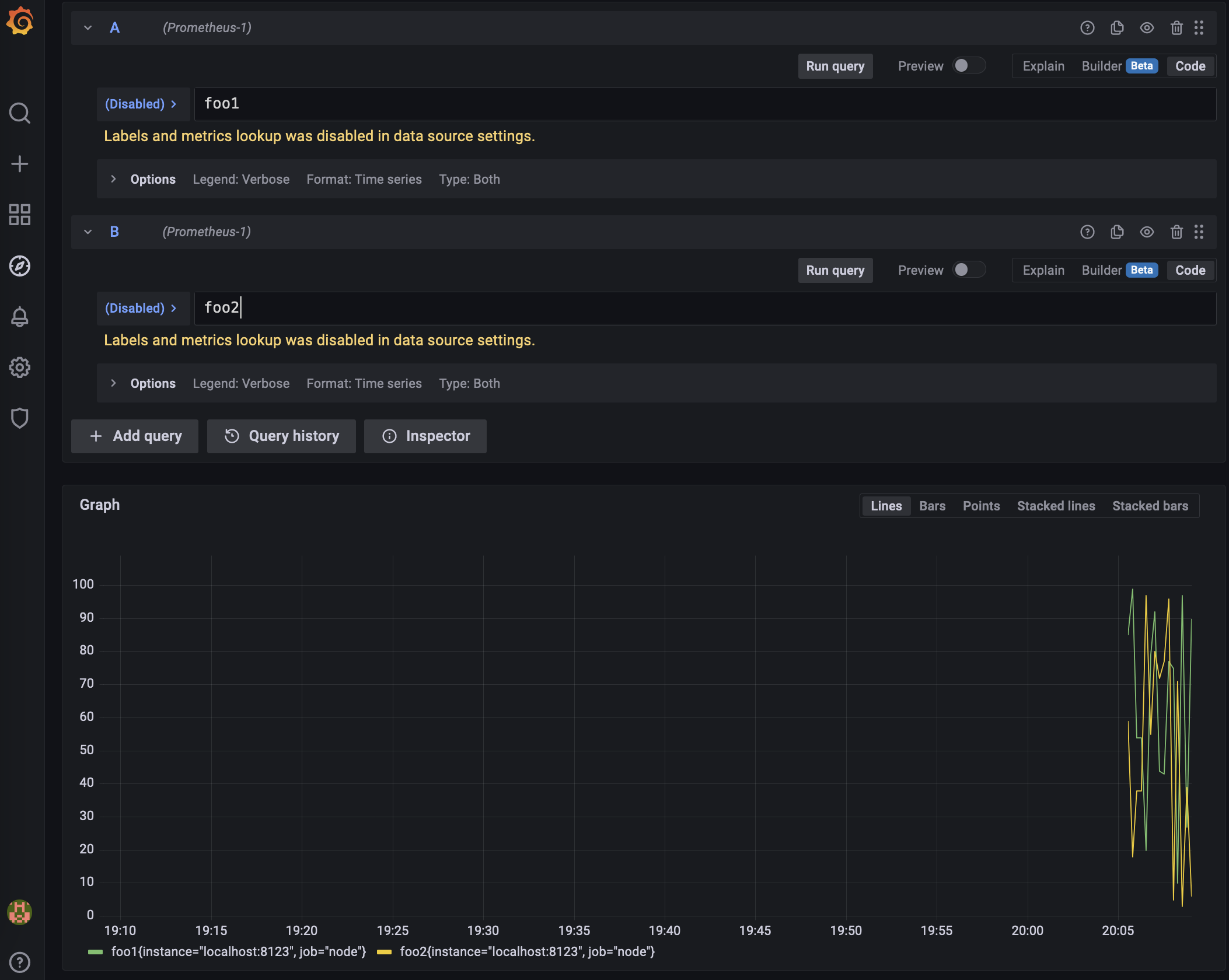

Prometheus as output format

Use case:

— output data exactly as Prometheus expects;

— define a custom HTTP handler for a query with custom metrics;

Developer: Vladimir Cherkasov.

Prometheus as output format

Result should have columns:

— name;

— value;

— type (counter, gauge, histogram, summary, untyped or empty);

— labels (optional);

— help (optional);

Prometheus as output format

# HELP metric0 info 0

# TYPE metric0 counter

metric0 0

metric1 1

# HELP metric2 info 2

metric2 2 1395066363000

# TYPE metric3 counter

metric3 3

Bonus:

— histograms are supported.

Prometheus as output format

global:

scrape_interval: 3s

scrape_configs:

- job_name: node

metrics_path: '/'

params:

query: ["SELECT ... FORMAT Prometheus"]

static_configs:

- targets: ['localhost:8123']

FIPS 140-2 compliance:

— "crypto" module of the SSL library (BoringSSL)

is switched to FIPS 140-2 compliant version.

Developer: Meena-Renganathan.

system.certificates table:

— introspection of the root certificates that ClickHouse can use.

— check that no malicious certificates are used;

check issues with SSL certificates.

Developer: Yakov Olkhovskiy.

Previous versions:

— rigid max_memory_usage, set to 10 GB by default;

— max_memory_usage_for_user can be set;

— when memory limit exceeded, exception is thrown;

Since 22.5:

— max_memory_usage is not set by default;

— new settings memory_overcommit_ratio_denominator, memory_overcommit_ratio_denominator_for_user;

Developers: Dmitriy Novik.

Since 22.5:

— max_memory_usage is not set by default;

— new settings memory_overcommit_ratio_denominator,

memory_overcommit_ratio_denominator_for_user;

— in case of memory shortage,

the most "overcommitted" query will be killed;

— other queries will wait for up to

memory_usage_overcommit_max_wait_microseconds;

— max_memory_usage and max_memory_usage_for_user

can act as hard limits;

Developers: Dmitriy Novik.

TLDR:

Heavy query can use as much memory as available

as long as no smaller queries need memory.

Developers: Dmitriy Novik.

avg and sum without GROUP BY:

SELECT sum(revenue), avg(price) FROM table

Up to 1.5 times faster!

Developers: Maksim Kita.

Unary arithmetic functions

bitCount, bitNot, abs, sign, intExp*, negate, round*:

Up to 7 times faster!!!

Developers: Maksim Kita.

JIT compilation for sorting comparator.

For ORDER BY, INSERT INTO MergeTree, Merge JOIN.

SELECT * FROM test

ORDER BY value

— 0 rows in set. Elapsed: 5.299 sec. Processed 100.00 million rows, 1.70 GB (18.87 million rows/s., 320.81 MB/s.)

SET compile_sort_description=1;

SELECT * FROM test

ORDER BY value

— 0 rows in set. Elapsed: 4.131 sec. Processed 100.00 million rows, 1.70 GB (24.21 million rows/s., 411.50 MB/s.)

— +30% performance improvement!

Developers: Maksim Kita.

Indices for vector embeddings.

Search for nearest objects: images, texts, etc...

FAISS, Annoy, HNSW, DiskAnn.

Prototype stage, expected in Autumn 2022.

Already tested by first customers!

Sign up for wait-list: https://clickhouse.com/cloud/

Serverless operation, no infrastructure to manage.

Roadmap 2022 is published:

https://github.com/ClickHouse/ClickHouse/issues/32513

— open for discussions.

... all great stuff is discussed there, don't miss.